Teaching AI New Tricks: How MCP Bridges Chatbots and Real Data

When you think of AI nowadays, you probably think of chatbots and vibe coding. Ask a question, get an answer: sometimes right, sometimes not. But when you need an LLM to access specific systems and deliver precise outputs, AI needs help. Sometimes a lot of help.

The Model Context Protocol (MCP), introduced by Anthropic in late 2024, was created to solve this problem. It's like giving your AI chatbot a precise roadmap and a fixed toolbox, so it knows exactly which data sources are available and what format to deliver answers in. Once it has that, the AI does far less guessing (and making things up) and gives you much more reliable, consistent output.

What is MCP (Model Context Protocol)?

MCP is an open standard that lets AI chat tools (like Claude, or whatever you might already be using) connect with external data sources and tools in a standard way. Think of it as giving your AI a menu of explicit “endpoints” (APIs or functions) it can call — and telling it:

- How to call them (what parameters to pass)

- What they return (fields, types, formats)

Once the AI knows what it has access to, its behavior becomes much more predictable.
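To make that concrete, here is a minimal sketch of how a tool can describe itself to the AI. MCP servers advertise each tool with a name, a human-readable description, and a JSON Schema for its inputs (the inputSchema field). The get_pokemon tool and its name_or_id parameter are borrowed from the server described later in this article; the missing_required helper is a hypothetical illustration of how that schema tells a caller what it must pass.

```python
# A tool descriptor in the shape MCP servers use when listing their tools:
# a name, a description, and a JSON Schema describing the inputs.
# The tool itself is illustrative, not copied from a real server.
GET_POKEMON_TOOL = {
    "name": "get_pokemon",
    "description": "Fetch a Pokémon's name, types, and base stats from PokéAPI.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "name_or_id": {
                "type": "string",
                "description": "Pokémon name (e.g. 'snorlax') or Pokédex number",
            }
        },
        "required": ["name_or_id"],
    },
}


def missing_required(tool: dict, arguments: dict) -> list[str]:
    """Return the required parameters a proposed call leaves out (hypothetical helper)."""
    required = tool["inputSchema"].get("required", [])
    return [param for param in required if param not in arguments]


print(missing_required(GET_POKEMON_TOOL, {}))                        # ['name_or_id']
print(missing_required(GET_POKEMON_TOOL, {"name_or_id": "snorlax"}))  # []
```

Because the schema is plain data, the AI can read it before making a call, which is exactly what keeps it from guessing parameter names.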

APIs

An API (Application Programming Interface) is just a way for two pieces of software to talk to each other. You send a request like “Give me data about X” and it sends back structured data you can use. Think of it as ordering off a menu: you tell the waiter (the API) exactly which entrée you want (endpoint + parameters), and they bring back what you asked for (most of the time).

APIs often return a lot of data. That’s both a blessing and a curse. If you don’t tell your AI what to focus on, it may hand you everything or miss the part you wanted. MCP lets you define exactly which fields matter and how they should be presented.

A Fun Example: Snorlax from PokeAPI

PokéAPI is a free data service for Pokémon. If you call its pokemon endpoint with "snorlax" as the parameter, you get back a huge JSON payload: stats, abilities, types, moves, images, even game version details.

Try it here to see just how much data comes back (it simply displays the raw JSON in your browser window): https://pokeapi.co/api/v2/pokemon/snorlax
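Here is a minimal Python sketch of trimming that payload down, using only the standard library. It assumes the response layout you can see in the browser (a types list whose entries hold type.name, and a stats list whose entries hold base_stat and stat.name). The function names and the User-Agent value are hypothetical, and the fetch is kept separate so the trimming logic can be tried on a small sample without a network call.

```python
import json
import urllib.request

POKEAPI_BASE = "https://pokeapi.co/api/v2"


def fetch_pokemon(name_or_id: str) -> dict:
    """Download the full JSON payload for one Pokémon (needs network access)."""
    url = f"{POKEAPI_BASE}/pokemon/{str(name_or_id).lower()}"
    # A descriptive User-Agent is polite; the value here is arbitrary.
    request = urllib.request.Request(url, headers={"User-Agent": "pokeapi-example/0.1"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def summarize_pokemon(payload: dict) -> dict:
    """Keep only the name, types, and base stats from the very large payload."""
    return {
        "name": payload["name"],
        "types": [entry["type"]["name"] for entry in payload["types"]],
        "base_stats": {entry["stat"]["name"]: entry["base_stat"] for entry in payload["stats"]},
    }


# Offline demo: a trimmed sample in the same shape as the real response.
sample = {
    "name": "snorlax",
    "types": [{"slot": 1, "type": {"name": "normal"}}],
    "stats": [
        {"base_stat": 160, "stat": {"name": "hp"}},
        {"base_stat": 110, "stat": {"name": "attack"}},
        {"base_stat": 65, "stat": {"name": "defense"}},
    ],
}
print(summarize_pokemon(sample))
```

With network access, summarize_pokemon(fetch_pokemon("snorlax")) applies the same trimming to the live response.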

If you followed the link, you can see why it helps to have AI read it all for you. With MCP, you can teach your chosen chatbot:

- “Here’s the endpoint to get a Pokémon.”

- “These are the fields I care about (name, type, base stats).”

- “Here’s the format I expect in the answer.”

Now when you ask “Show me Snorlax’s stats,” your AI calls the endpoint correctly, extracts only what you defined (maybe just HP, Attack, Defense), and delivers it in a clean, consistent table.
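The "clean, consistent table" part comes down to a fixed rendering rule. A minimal sketch, assuming the stats have already been reduced to a dictionary keyed by PokéAPI's stat names (hp, attack, defense, and so on); the function name, labels, and column order are illustrative choices, not part of MCP itself, and the numbers in the demo are sample values.

```python
# Display labels for PokéAPI stat names; unknown names fall back to title case.
LABELS = {
    "hp": "HP",
    "attack": "Attack",
    "defense": "Defense",
    "special-attack": "Sp. Atk",
    "special-defense": "Sp. Def",
    "speed": "Speed",
}


def stats_table(name: str, stats: dict, fields=("hp", "attack", "defense")) -> str:
    """Render the chosen stats as a Markdown table, always in the same column order."""
    header = "| Pokémon | " + " | ".join(LABELS.get(f, f.title()) for f in fields) + " |"
    divider = "|---" * (len(fields) + 1) + "|"
    values = " | ".join(str(stats.get(f, "n/a")) for f in fields)
    row = f"| {name.title()} | {values} |"
    return "\n".join([header, divider, row])


print(stats_table("snorlax", {"hp": 160, "attack": 110, "defense": 65, "speed": 30}))
```

Stats you did not ask for (speed here) are simply dropped, and missing ones show up as "n/a" instead of silently disappearing, so every answer has the same shape.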

A Business Example

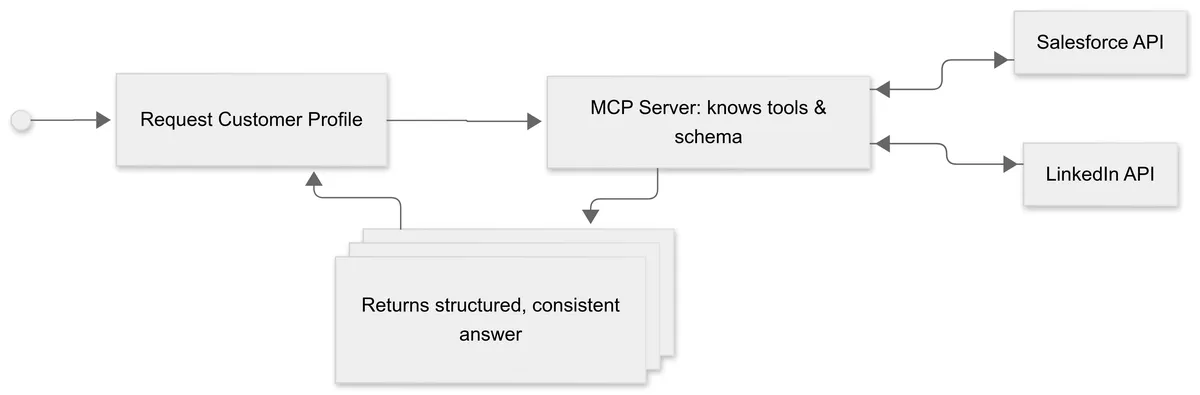

Imagine you want to create a customer profile by pulling data from two systems:

- Salesforce.com API: gives you contact info and lets you search across your CRM.

- LinkedIn API: gives you company details, recent job changes, and public professional data.

Both APIs could return a ton of data. With MCP, you could:

- Define a tool called get_customer_profile(customer_id) with this schema:
  - Inputs: Salesforce customer ID
  - Process:
    - Call the Salesforce API to get core CRM data (for example Name, Email, Open Opportunities)
    - Call the LinkedIn API to enrich the profile with the specific fields you need
  - Output: A single JSON object like this:

```json
{
  "customer_name": "Acme Corp",
  "contact": {
    "name": "John Smith",
    "email": "john.smith@acme.com",
    "title": "Chief Financial Officer"
  },
  "salesforce": {
    "open_opportunities": 3,
    "nps_score": 75,
    "recent_cases": [
      {
        "case_id": "12345",
        "status": "Open",
        "created_date": "2025-09-01"
      }
    ]
  },
  "linkedin": {
    "company_name": "Acme Corporation",
    "website": "https://www.acme.com",
    "industry": "Manufacturing",
    "employee_count_range": "201-500",
    "follower_count": 125000
  }
}
```
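Behind a tool like that, the server code makes the two calls and reshapes the results into the agreed object. Below is a minimal Python sketch under loud assumptions: fetch_salesforce and fetch_linkedin are hypothetical stand-ins for whatever client code you use (they are passed in as plain functions, so no real Salesforce or LinkedIn library calls are shown), the raw field names they return are invented, and keying the LinkedIn lookup by account name is also an assumption.

```python
from typing import Callable

# Hypothetical fetchers: plug in your own Salesforce / LinkedIn client code here.
Fetcher = Callable[[str], dict]


def get_customer_profile(customer_id: str, fetch_salesforce: Fetcher, fetch_linkedin: Fetcher) -> dict:
    """Combine CRM data and company enrichment into the one agreed JSON shape."""
    crm = fetch_salesforce(customer_id)
    # Assumption: the LinkedIn lookup is keyed by the account name from the CRM.
    company = fetch_linkedin(crm["account_name"])
    return {
        "customer_name": crm["account_name"],
        "contact": {
            "name": crm["contact_name"],
            "email": crm["contact_email"],
            "title": crm["contact_title"],
        },
        "salesforce": {
            "open_opportunities": crm["open_opportunities"],
            "nps_score": crm["nps_score"],
            "recent_cases": crm["recent_cases"],
        },
        "linkedin": {
            "company_name": company["name"],
            "website": company["website"],
            "industry": company["industry"],
            "employee_count_range": company["employee_count_range"],
            "follower_count": company["follower_count"],
        },
    }


# Stub fetchers returning the example values above (raw field names are invented).
def fake_salesforce(customer_id: str) -> dict:
    return {
        "account_name": "Acme Corp",
        "contact_name": "John Smith",
        "contact_email": "john.smith@acme.com",
        "contact_title": "Chief Financial Officer",
        "open_opportunities": 3,
        "nps_score": 75,
        "recent_cases": [{"case_id": "12345", "status": "Open", "created_date": "2025-09-01"}],
    }


def fake_linkedin(company_name: str) -> dict:
    return {
        "name": "Acme Corporation",
        "website": "https://www.acme.com",
        "industry": "Manufacturing",
        "employee_count_range": "201-500",
        "follower_count": 125000,
    }


profile = get_customer_profile("001-demo", fake_salesforce, fake_linkedin)
print(profile["contact"]["name"], profile["linkedin"]["follower_count"])
```

Passing the fetchers in as arguments keeps the reshaping logic testable with stubs before you wire up real credentials.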

Tell the AI: “Always return this schema.” Now when you or your team ask “Show me a customer profile for Acme Corp,” it knows exactly which APIs to call, what to pull, and how to present it. You get a reliable, repeatable answer you can drop into a CRM note, a report, or even a Power BI dashboard.
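One way to back up "always return this schema" in code is to check every profile before it leaves the server. A minimal sketch, assuming you want to flag missing sections and wrongly typed fields; it hand-rolls the check with plain Python rather than using a JSON Schema validator library, and the REQUIRED mapping simply mirrors the example object above.

```python
# Expected sections and the type each field must have (mirrors the example profile).
REQUIRED = {
    "contact": {"name": str, "email": str, "title": str},
    "salesforce": {"open_opportunities": int, "nps_score": int, "recent_cases": list},
    "linkedin": {
        "company_name": str,
        "website": str,
        "industry": str,
        "employee_count_range": str,
        "follower_count": int,
    },
}


def schema_problems(profile: dict) -> list[str]:
    """List every way a profile deviates from the agreed schema (empty list = valid)."""
    problems = []
    if not isinstance(profile.get("customer_name"), str):
        problems.append("customer_name: missing or not a string")
    for section, fields in REQUIRED.items():
        block = profile.get(section)
        if not isinstance(block, dict):
            problems.append(f"{section}: missing section")
            continue
        for field, expected in fields.items():
            # A missing field comes back as None, which also fails the type check.
            if not isinstance(block.get(field), expected):
                problems.append(f"{section}.{field}: expected {expected.__name__}")
    return problems


print(schema_problems({"customer_name": "Acme Corp"}))
```

An empty list means the profile is safe to hand to a report or dashboard; anything else can be sent back to the AI as an error to correct.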

Why This Matters for Finance Professionals

Most finance professionals don't write code, but you work with data all the time. LLMs can be powerful tools for working with metrics, forecasts, KPIs, and business plans, but only if they know where to look and how to handle the data that comes back.

An MCP server lets you:

- Turn a generalist into a specialist

- Teach the AI exactly what each tool does and what the output looks like

- Enforce consistent output (so you can dump it into Excel, dashboards, or workflows)

- Reduce the risk of bad answers by cutting out most of the guesswork

What I Built

Inspired by NetworkChuck’s video and his tutorial repo, I built my own MCP server for PokéAPI: pokeapi-mcp-server.

It’s a small Python service that runs in Docker. Docker has a free MCP Toolkit, so you can run the server on your own computer. The server exposes four tools so the AI can:

- get_pokemon(name_or_id) – fetches Pokémon data

- get_species(name_or_id) – gets flavor text and evolution info

- get_evolution_chain(name_or_id) – shows evolution paths

- search_by_type(type_name) – lists Pokémon by type
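As an example of the work one of these tools does internally, here is a minimal sketch of turning PokéAPI's nested evolution-chain data into readable paths. It assumes the chain layout PokéAPI returns (each node has species.name plus an evolves_to list of child nodes); the evolution_paths function is hypothetical and is not taken from the pokeapi-mcp-server code.

```python
def evolution_paths(node: dict) -> list[list[str]]:
    """Walk an evolution-chain node depth-first and return every root-to-leaf path."""
    name = node["species"]["name"]
    children = node.get("evolves_to", [])
    if not children:
        return [[name]]
    return [[name] + path for child in children for path in evolution_paths(child)]


# Trimmed sample in the shape of the "chain" field of an evolution-chain response.
munchlax_chain = {
    "species": {"name": "munchlax"},
    "evolves_to": [{"species": {"name": "snorlax"}, "evolves_to": []}],
}

for path in evolution_paths(munchlax_chain):
    print(" -> ".join(path))  # munchlax -> snorlax
```

Returning a list of paths rather than a single line handles branching families, where one Pokémon can evolve in several directions.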

Because MCP defines the schema, Claude (or another LLM) knows exactly how to call each tool and what the results look like. You can do the same with any API you have access to — Salesforce, your internal finance system, even public data like SEC filings or economic indicators.
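When the AI decides to use a tool, the request reaches the server as a tools/call message carrying the tool's name and its arguments, and the server replies with a list of content items. The simplified sketch below hand-rolls that routing to show the idea; the get_pokemon handler is a stub returning hypothetical data, and in practice an MCP SDK (such as the official Python SDK) handles this plumbing for you.

```python
import json
from typing import Callable


def get_pokemon(name_or_id: str) -> dict:
    """Stub standing in for a real PokéAPI lookup (returns hypothetical data)."""
    if not name_or_id:
        raise ValueError("name_or_id must not be empty")
    return {"name": str(name_or_id).lower(), "stub": True}


# Registry mapping advertised tool names to the Python functions that implement them.
TOOLS: dict[str, Callable[..., dict]] = {"get_pokemon": get_pokemon}


def handle_tools_call(params: dict) -> dict:
    """Route the params of a tools/call request to a handler and wrap the result as text content."""
    name = params["name"]
    if name not in TOOLS:
        # Simplification: a real server answers an unknown tool with a JSON-RPC error.
        raise ValueError(f"Unknown tool: {name}")
    try:
        result = TOOLS[name](**params.get("arguments", {}))
    except Exception as exc:
        # Failures while running the tool go back to the model flagged with isError.
        return {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    return {"content": [{"type": "text", "text": json.dumps(result)}], "isError": False}


request = {"name": "get_pokemon", "arguments": {"name_or_id": "Snorlax"}}
print(handle_tools_call(request))
```

Reporting tool failures as content flagged with isError, instead of crashing, lets the model see what went wrong and retry with better arguments.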

LLMs can already chat about anything. MCP lets you teach them to work with your data reliably. Whether it’s Snorlax stats from PokéAPI or customer profiles from Salesforce + LinkedIn, the pattern is the same:

- Define the tools (endpoints + schemas).

- Teach the AI what’s available.

- Enforce consistent output so you can trust the results.

If you’re a finance professional curious about AI, MCP is a powerful way to make large language models specialized, reliable, and useful for your own datasets. It’s the bridge between the chat interface you already know and the precision you need.